In many ways, the modern computer era began in the New Englander Motor Hotel in Greenwich, Connecticut.

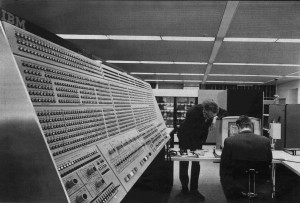

It was there in 1961 that a task force of top IBM engineers met in secret to figure out how to build the next-generation IBM computer.

A new design was sorely needed. IBM already sold a number of successful though entirely separate computer lines, but they were becoming increasingly difficult to maintain and update.

“IBM in a sense was collapsing under the weight of having to support these multiple incompatible product lines,” said Dag Spicer, chief content officer for the Computer History Museum, which maintains a digital archive on the creation and success of the System/360.

The system eventually became a huge success for the company — and a good thing too. IBM’s president at the time, Tom Watson, Jr., killed off other IBM computer lines and put the company’s full force behind the System/360. IBM’s revenue swelled to $8.3 billion by 1971, up from $3.6 billion in 1965. Through the 1970s, more than 70 percent of mainframes sold were IBM’s. By 1982, more than half of IBM’s revenue came from descendants of the System/360.

But its impact can be measured by more than just the success it brought to IBM.

“IBM was where everyone wanted to work,” said Margaret McCoey, an assistant professor of computer science at La Salle University in Philadelphia, who also debugged operating system code for Sperry/Univac System/360 clones in the late 1970s.

The System/360 ushered in a whole new way of thinking about designing and building computer systems, a perspective that seems so fundamental to us today that we may not realize it was rather radical 50 years ago.

Before the System/360 introduction, manufacturers built each new computer model from scratch. Sometimes machines were even built individually for each customer. Software designed to run on one machine would not work on other machines, even from the same manufacturer. The operating system for each computer had to be built from scratch as well.

The idea hatched at the Connecticut hotel was to have a unified family of computers, all under a single architecture.

Gene Amdahl was the chief architect for the system and Fred Brooks was the project leader.

Amdahl would later formulate Amdahl’s Law, which, roughly stated, holds that the performance gain from breaking a computer task into parallel operations is limited by the portion of the task that must still run serially. And Brooks would go on to write “The Mythical Man-Month,” which advanced a related idea: adding more people to a late software development project can actually slow development of the software, due to the additional burden of managing the extra people.
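For readers who want to see the arithmetic, Amdahl’s Law is usually written as speedup = 1 / ((1 - p) + p / n), where p is the fraction of a task that can run in parallel and n is the number of parallel workers. The short Python sketch below is only an illustration of that textbook formula; the function name `amdahl_speedup` and the 90 percent figure are assumptions chosen for the example, not anything taken from IBM or from Amdahl’s own writing.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup under the textbook form of Amdahl's Law.

    parallel_fraction: share of the task (0.0 to 1.0) that can be parallelized.
    workers: number of parallel workers (hypothetical processors or threads).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part takes the same time no matter how many workers exist;
    # only the parallel part is divided among them.
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Illustrative assumption: 90 percent of the task can be parallelized.
    for n in (1, 2, 8, 64, 1024):
        print(f"{n:>5} workers: {amdahl_speedup(0.9, n):.2f}x")
```

Under that assumption the speedup never reaches 10x, however many workers are added, because the remaining 10 percent of the work still runs serially.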

The idea they came up with was to have a common architecture shared among the lower-end, less expensive machines and the priciest high-speed models. The top-end models would perform 40 times as fast as the low-end models. Keep in mind that applying the word “architecture” to the design of a computer was all but unheard of in the early 1960s.

But specifying an architecture, rather than a specific implementation, paved the way for compatibility amongst different models.

“In designing a system with both upward and downward compatibility for both scientific and business customers, IBM was attempting to use a single architecture to meet the needs of an unprecedentedly large segment of its customers,” according to a 1987 case study of the System/360 published by the Association for Computing Machinery. In fact, the “360” in the moniker was meant to indicate that the machine could serve all kinds of customers, small or large, business or scientific.

“The System/360 actually unified both business and computing threads in the market into one system,” Spicer said.

While the idea seems obvious today, the concept of a unified family of computers had profound consequences for IBM, its customers and the industry as a whole.

IBM was able to use a single OS for all of its computers (though it ended up creating three variants to span the different use cases). A lot of work writing software for separate computers was eliminated, allowing engineers to concentrate on new applications instead.

IBM saved a lot of resources on the hardware as well. No longer would components, such as processors and memory, need to be designed for each machine. Now different models could share general-purpose components, allowing IBM to enjoy more economies of scale.

Customers benefited as well. They could take code written for one System/360 machine and run it on another. Not only could users move their System/360 code to a bigger machine without rewriting it, but they could port it to a smaller model as well.

When an organization bought a new computer in the early 1960s it “generally had to throw out all of its software, or at least rejigger it to work on the new hardware,” Spicer said. “There was no idea of having computers that could run compatible software over the generations.”

IBM has steadfastly maintained backward compatibility in the decades since. Programs for the original System/360s can still run, sometimes with only slight modification, on IBM mainframes today (which is not to say IBM hasn’t aggressively urged customers to upgrade to the latest models for performance improvements).

Compare that longevity with the record of one of IBM’s largest competitors in the software market. This month, Microsoft ends support for its Windows XP OS, little more than a decade after its release.

The System/360 and its successor System/370 continued to sell well into the 1970s, as punch cards were slowly replaced by IBM 3270 terminals, known as green screens.

Green screens changed the way the System/360 and System/370 could be used. Originally, the machines did batch processing, in which a job was submitted via punch cards. The machine would churn through the data and return the results. The green screens paved the way for more interactive sessions with a machine, noted Greg Beedy, senior principal product manager at CA and a 45-year veteran of working on mainframes.

Beedy noted that the 3270 terminals were always 80 columns wide — equal to the number of columns on a punch card.

Even after the introduction of terminals, programming was a much more tedious job back in the 1970s; today, programmers have “instant gratification,” McCoey said.

“They hit the return key and up pops an answer. That never happened. We would put together a new unit and leave it for the overnight operators to run,” she said. “It would take them about 10 hours to run the test and they’d spend two or three hours to make sure everything ran correctly.”

Debugging back then involved reviewing a stack of papers with nothing but hexadecimal code. McCoey would have to transcribe the code back into the routines the original programmer had devised, and then try to locate the logical error in the code.

“For me, that was a lot of fun. It was like a puzzle,” McCoey said.

The programming world was also smaller then. Beedy started working on System/360 and similar systems in the mid-1970s, writing COBOL code for insurance companies.

“Back then, it was a tiny cult of people. Everybody knew each other, but the rest of the world didn’t know what we did. It was very arcane and obscure,” Beedy said. “Even the word ‘software’ was not that well-known. I told people I worked for a software company, they looked at me like I was crazy.”

Pat Toole, Sr., one of the original System/360 engineers and later an IBM division president, observed that there were no commercial enterprise software companies, such as an SAP or Oracle, in the mainframe days. IBM supplied a few standard programs for banks, but customers also wrote their own software and it was a big undertaking.

“It was a big deal if a company spent a fortune and two or three years to write a program for a banking application, then your hardware wouldn’t run it and you basically had to redo it all,” Toole said.

McCoey recalled how an insurance company she worked for after she left Sperry would run the billing program for the Wanamaker’s department store on its mainframe.

“Twice a month, they would shut down their billing over the weekend and run all Wanamaker’s accounts, so Wanamaker’s didn’t have to employ their own IT department,” McCoey said.

Nonetheless, businesses saw the value of System/360 and other mainframes.

“They not only allowed business to operate faster and gain competitive advantage but allowed them to have a lot more flexibility in their products and services. Instead of just having one standard product, you could have all these different pricing schemes,” Beedy said.

It was not until the emergence of lower-cost minicomputers in the late 1970s that IBM’s dominance in computer platforms started to fade, though the company caught the next wave of computers, PCs and servers, in the following decade. IBM has also managed to keep its mainframe business percolating.

Organizations continued to use mainframes for their core operations, if for no other reason than that the cost of porting or rewriting their applications to run on other platforms would dwarf any savings they might enjoy from less expensive hardware, Spicer said.

“IBM, while it has been a big lumbering company at times, has adapted well over the years to keep the mainframe relevant. They managed to bring price/performance down to where mainframe computing stayed viable,” Beedy said. “Many times it has been announced that the mainframe was dead, replaced by minicomputers or servers, but what we’ve seen all along [is that these new technologies] extend what is already there, with the mainframe as the backbone.”