That’s hardly a new observation. It was most notably made in 2003, in a now-famous Harvard Business Review article by pundit Nicholas Carr called “IT Doesn’t Matter.” The gist of Carr’s argument: IT has become a commodity, like electricity. It’s no longer a sustainable differentiator (when was the last time you heard a company brag that it did a better job than a competitor because it had “better electricity”?).

And, according to Carr, the management of IT is therefore withering into a simple discipline of risk-management and cost-optimisation. IT managers should no longer worry about delivering cutting-edge solutions that make their companies more effective — they should stick to the knitting of minimising costs and risks from services that are increasingly procured as outsourced (utility) services. In other words, IT organisations are effectively on the same level as janitorial services — necessary maintenance, but hardly innovators.

Predictably enough, Carr’s article launched plenty of debate.

On the one hand, the rise in outsourced, hosted, and cloud services would seem to indicate that Carr’s correct. Over 90% of IT organisations use some form of managed, hosted, or cloud services.

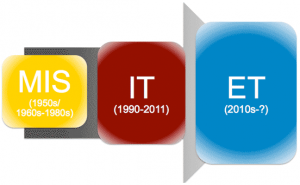

But here’s what Carr missed. Yes, IT — at least as we’ve known it for approximately 25 years — is indeed transforming. But far from becoming a commodity, technology is more important than ever. Businesses, schools, and governments desperately need tech-savvy managers who can innovate quickly, operationalise effectively, and keep their organisations competitive. In other words, IT is being replaced by “enterprise technology” (ET): Technology that’s no longer confined to offices and office workers, but is embedded throughout the enterprise.

ET, in a nutshell, is the combination of technologies that enables embedded, networked intelligence. It includes a range of wireless/mobile technologies (from smartphones to wireless sensor networks); display and form-factor technologies (organic LEDs, miniaturisation, enhanced battery technologies); next-generation computing (such as quantum computing); and, last but not least, so-called big data, which includes vastly improved data-mining and data-analytics capabilities that enable the enterprise to rapidly and efficiently sift through the increasing flow of real-time data to uncover actionable information.

From MIS to IT to ET

Some historical perspective: When computers were first introduced into businesses in the 1950s and 1960s, they revolutionised the back office. By automating repetitive processes, mainframe computers (and the software ecosystems that grew up around them) were able to accelerate data processing in ways that were, at the time, unheard of. Functions like accounting and payroll were revolutionised. The management of information services (MIS) became a specialised discipline focused on operationalising this technology: ensuring that systems were reliable and scalable and otherwise bulletproof.

It’s easy to forget how disruptive and transformative MIS was, at the time. Companies that “computerised” were able to uncover and leverage information ahead of their competitors. They required fewer back-office employees, and could therefore run leaner operations.

But as transformative as it was, the impact of MIS was limited. “Computers” — meaning mainframes, and mainframe-based applications — could only solve a subset of problems, specifically anything that could be handled in batch mode. They weren’t particularly good at sharing information, or at providing real-time responsiveness.

What happened next was a classic page out of the “Innovator’s Dilemma” playbook (referring to the seminal 1997 book by consultant and professor Clayton Christensen): MIS managers got caught up in getting better and better at managing their systems, and completely missed an equally transformational set of technologies that was then arising. Specifically, minicomputers and PCs had begun to hit the market. And although, like all disruptive technologies, they initially seemed slow and unreliable compared with “real” computers, they rapidly cropped up in businesses that were hungry for a way to get computing services without the delay and overhead that users had come to expect from the batch-mode mainframes. Along with PCs came networks: first LANs (remember Xerox Network Systems and Novell NetWare) and later, of course, the Internet.

The combination of desktop computing and networking proved explosive: Throughout the 1980s and 1990s, it revolutionised the day-to-day lives of an entirely new group of office workers. These were not the back-office employees in accounting and payroll (who had already experienced their revolution), but the people we now call “knowledge workers.” (Interestingly, the term was first used by management guru Peter Drucker in 1959.)

It’s worth dwelling on this point briefly. Throughout the 1950s, 1960s, and 1970s — the years in which MIS was exploding — the typical “knowledge worker” worked in an office, with a telephone on the desk, a filing cabinet (or several) for data storage, and a secretary (assuming the knowledge worker ranked highly enough to merit one) to handle communications needs. In other words, despite the fact that the company was becoming “computerised,” the knowledge worker’s tools were largely human or physical.

“Information technology” changed all that. By the end of the century, the typical knowledge worker no longer needed the filing cabinets or the secretary. He had a desktop (or possibly laptop) computer. Even the phone and the desk were rapidly becoming optional: With Internet connectivity and VoIP, the phone and computer could be the same device, and the knowledge worker could be anywhere.

And here’s the thing: By and large, the people who managed IT were not the same people who managed MIS. As noted earlier, while the MIS folks focused on improving and optimising their back-office systems, a whole new breed of technologist arose. These were the workgroup computing specialists, the LAN administrators, and later routing and Internet gurus.

These people were often hired by the business units directly — rather than by the MIS managers — and managed by the business units. Why? Because the power of these new technologies lay in their ability to deliver what the back-office computerisation hadn’t: to distribute information quickly and effectively among multiple workers (think email), and to provide real-time answers to computational problems (think Excel).

In other words, if MIS was all about optimising back-office functions, IT was all about empowering knowledge workers. And the business units wanted control over how and when their employees got access to these new technologies. More specifically, they didn’t want to wait for MIS to get around to addressing these technologies, because the MIS teams typically took far too long.

So business units began, in essence, operating their own technology groups — IT groups. With the tech boom of the 1990s continuing into 2000, most companies didn’t worry too much about the fact that they had two separate technology groups: back-office MIS and front-office IT. The goal at that point was to get all this new technology deployed as quickly as possible — not to manage it optimally.

But with the crash in 2001, all that changed. CIOs were under intense pressure to consolidate operations. When the dust settled, the battle was over, and IT had won: MIS and IT were consolidated into an IT department (or, in some cases, multiple IT departments, if an organisation was large enough to support them). But MIS didn’t run IT; IT ran MIS.

The same thing is happening now. With the rise of consumer technologies, business units (and individuals themselves) are bringing in technologies that are outside the purview of the traditional IT department. And improved miniaturisation, enhanced display capabilities, and most of all wireless and mobility are technology-enabling people and processes that had been until now relatively untouched by IT.

Just as IT brought “computerisation” to knowledge workers, ET is bringing computing and networking to the roughly 60% of employees who aren’t typical knowledge workers and, equally important, to the nonhuman systems (like electrical grids and hospital equipment) that represent the core business functions of the organisation.

Just as IT ultimately subsumed MIS, ET will ultimately subsume IT.

Another way to look at all this is that the entire trajectory, from the 1950s onwards, represents a process of technology emerging from a sequence of ghettos (first the glass house, later office workers). For the next several decades, the technology revolution will be occurring not just within a subset of users, but across the entire enterprise.

That’s the element Carr missed in his 2003 analysis: The emergence of ET.

What is ET?

What technologies, specifically, are we talking about? If mainframe computers and associated storage and applications were the hallmarks of MIS, and the PC, LAN, and the Internet of IT, what are the technologies that characterise ET?

There are several:

• Wireless and mobility, including both consumer-communications services (like the 4G suite of standards, LTE-Advanced, emerging WiFi/WiMax technologies such as 802.16m, and wireless-integration such as 802.21) and machine-to-machine (M2M) technologies such as M2MXML and initiatives such as TIA TR-50.

• Display technologies, particularly organic LEDs (OLEDs), which are LEDs that can be printed on paper (think of ordinary wallpaper becoming a video monitor at the flick of a switch, with no need for that dedicated monitor on your desk).

• Battery technologies. Although totally new technologies remain far from deployment, several innovative advances hold the promise of greatly extending (meaning a 10x improvement) the life of traditional lithium-ion batteries. Researchers at Stanford and DuPont have developed such advances, and companies like startup Leyden Energy are bringing them to market.

• Sensor technologies. Sensors for a broad range of applications can be coupled to microprocessors and wireless networks to provide real-time information updates from a vast range of real-world systems.

• Quantum computing. Although most folks aren’t aware of it, there are upper limits to Moore’s Law (the observation that computing power roughly doubles every two years) based on current technology. Quantum computing, such as the breakthrough recently announced by IBM, can lead to exponentially increased computing power, leapfrogging Moore’s Law and ushering in an era of super smart, super small devices.

• Big data. Yes, it’s a buzzword, but the ability to rapidly analyse vast volumes of data (particularly streaming data) will result in the ability to respond and react in real time.

This all may sound somewhat hypothetical, so some examples:

With a combination of wireless networking, sensor technologies, and big data, a global retail organisation could monitor, in real time, the physical movement of each individual product it carries in every store. Not only would this reduce shoplifting (a centralised management operations centre in, say, Cincinnati could alert a store in Sydney that a customer was about to make off with a product), but it could also provide the ability to respond in real time to unexpected behaviours. For example: customers in Dallas inspect, but don’t buy, the pink sweaters, yet the store can’t keep the red ones in stock. Could this be the result of a previously unnoticed quality flaw in the pink sweaters? Today, this kind of analysis can be done on a day-to-day or week-to-week basis. Imagine the competitive advantage of being able to uncover and address such issues on a minute-by-minute basis.
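
To make the sweater scenario a little more concrete, here is a minimal Python sketch of how a stream of sensor events might be checked for items that are picked up often but rarely bought. Everything in it is an assumption for illustration: the class name `InspectBuyMonitor`, the event format, and the three thresholds are hypothetical, and a real deployment would involve far more than a single in-memory window.

```python
from collections import Counter, deque

# Hypothetical thresholds, chosen only for illustration.
WINDOW_SECONDS = 600    # consider the last ten minutes of events
MIN_INSPECTIONS = 5     # ignore items with too little data
MAX_BUY_RATIO = 0.2     # flag items bought on at most 20% of inspections


class InspectBuyMonitor:
    """Tracks inspect/buy events per item over a sliding time window."""

    def __init__(self, window_seconds=WINDOW_SECONDS):
        self.window_seconds = window_seconds
        self.events = deque()      # (timestamp, item, action), oldest first
        self.counts = Counter()    # (item, action) -> count inside the window

    def record(self, timestamp, item, action):
        """Add one event; action is 'inspect' or 'buy'.

        Timestamps are assumed to arrive in non-decreasing order.
        """
        self.events.append((timestamp, item, action))
        self.counts[(item, action)] += 1
        self._expire(timestamp)

    def _expire(self, now):
        # Drop events that have fallen out of the sliding window.
        while self.events and self.events[0][0] <= now - self.window_seconds:
            _, item, action = self.events.popleft()
            self.counts[(item, action)] -= 1

    def flagged_items(self):
        """Return items inspected often but rarely bought in the window."""
        inspected_items = {item for (item, action) in self.counts
                           if action == "inspect"}
        flagged = []
        for item in sorted(inspected_items):
            inspected = self.counts[(item, "inspect")]
            bought = self.counts[(item, "buy")]
            if inspected >= MIN_INSPECTIONS and bought / inspected <= MAX_BUY_RATIO:
                flagged.append(item)
        return flagged


# Hypothetical event stream: both sweaters are inspected, only red is bought.
monitor = InspectBuyMonitor()
for t in range(6):
    monitor.record(t, "pink sweater", "inspect")
    monitor.record(t, "red sweater", "inspect")
    monitor.record(t, "red sweater", "buy")

print(monitor.flagged_items())  # prints ['pink sweater']
```

The sliding window is what turns a week-to-week report into a minute-by-minute one: old events fall away as new ones arrive, so the flag reflects what is happening in the store right now.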

Thanks to a combination of sensor technologies, wireless/mobility, and big data, hospitals are increasingly capturing a staggering amount of information about patients. One healthcare organisation recently advised Nemertes that roughly 50% of the devices on its IP network are medical monitoring devices — which means that, for example, an on-call physician can remotely log into a system and review a patient’s health (similar to the way that today’s sysadmins can check on the health of a virtual server). With appropriate big data tools, this information can be correlated and analysed in unprecedented ways: Does a particular type of heart irregularity often precede another, seemingly unrelated symptom in a particular class of patients, for instance athletic young men?
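
The “does one event often precede another?” question can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record format, the event names, the 14-day horizon, and the function names `followed_within` and `precedence_rate` are all hypothetical, and a raw rate like this is a starting point for investigation, not a clinical or statistical conclusion.

```python
def followed_within(events, first, second, horizon_days):
    """True if a `second` event occurs after a `first` event within horizon_days."""
    first_days = [day for day, name in events if name == first]
    second_days = [day for day, name in events if name == second]
    return any(0 < s - f <= horizon_days
               for f in first_days for s in second_days)


def precedence_rate(patients, first, second, horizon_days):
    """Among patients who had `first`, the share who had `second` soon after."""
    with_first = [events for events in patients.values()
                  if any(name == first for _, name in events)]
    if not with_first:
        return None  # nobody had the first event, so there is no rate to report
    hits = sum(followed_within(events, first, second, horizon_days)
               for events in with_first)
    return hits / len(with_first)


# Hypothetical records: patient id -> list of (day number, event name).
patients = {
    "p1": [(1, "irregular_rhythm"), (9, "dizziness")],
    "p2": [(3, "irregular_rhythm")],
    "p3": [(2, "dizziness")],
    "p4": [(5, "irregular_rhythm"), (40, "dizziness")],
}

rate = precedence_rate(patients, "irregular_rhythm", "dizziness", horizon_days=14)
print(rate)
```

In this made-up data, only one of the three patients with an irregular rhythm reports dizziness within the horizon. Restricting `patients` to a particular class (athletic young men, say) before calling the function is how the per-group comparison described above would be run.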

With a combination of wireless and mobility, sensor technologies, and big data, transportation and logistics companies are increasingly fine-tuning their ability to track and monitor ships, trucks and cargo. This allows companies to reduce energy costs and optimise travel routes, among other things.

The common denominator, again, is that these technologies are no longer merely “information systems” or “information technologies.” Information is a critical part of what they provide — but in the end, they deliver an unprecedented ability for enterprises to optimise, accelerate, and control core business functions.

Impact on tech pros

But if “technology” is now more than “information technology” — the same way that IT is more than just MIS — where does that leave IT professionals? Are they, as Carr suggested, destined to maintenance or support roles?

On the contrary. If Carr’s thesis had been entirely correct, the past decade would have seen a dramatic rise in the perception of IT as a “utility” function. Instead, the percentage of organisations that view IT as “strategic” has held relatively constant at nearly 40% over the past few years — and in 2011, increased to nearly 54%.

What are these strategic IT organisations doing? Increasingly, they’re being brought in to address technology issues that affect the entire company. Senior-level executives, including the board and the CEO, are increasingly recognising the expertise that IT brings to the table. These technologies seldom stand alone, so strategic IT organisations need to be able to integrate and support them transparently.

In a nutshell, that expertise lies in the ability to operationalise innovation in a replicable way.

To “operationalise innovation” means, in essence, to take new ideas and technologies out of the labs (or from vendors, or employees, wherever they arise) and deploy them effectively across an organisation. That process requires several sub-processes, including:

• Uncovering new technologies (ideally ahead of the competition)

• Testing and prototyping them

• Deciding which technologies merit further investment

• Developing the selected technologies

• Integrating disparate technologies into a cohesive business solution

• Putting in place all the appropriate operational infrastructure that enables organisational deployment (training, revisions in business processes, reorganisation, etc.)

Finally, technologists need to do this in a replicable manner — which means not just lucking out once or twice, but having a methodology that is able to consistently ensure, year-in-year-out, that the company is integrating innovation.

What the shift from IT to ET really means for IT professionals, in other words, is that they need to become adept at this process. They need to become the go-to guys and gals who can take a good idea and make it happen. And that means rethinking everything from sourcing strategies and vendor relationships to governance, organisation, and training/recruiting.

Railroad companies, for example, are under enormous pressure to conform to Federal Positive Train Control (PTC) regulations, which require PTC system implementation by 2015. According to the Federal Railroad Administration, “PTC systems are comprised of digital data link communications networks, continuous and accurate positioning systems such as NDGPS, on-board computers with digitised maps on locomotives and maintenance-of-way equipment, in-cab displays, throttle-brake interfaces on locomotives, wayside interface units at switches and wayside detectors, and control centre computers and displays.” In other words, these systems require many of the ET examples discussed earlier (displays, sensors, wireless/mobile networks). And railroad companies are increasingly tapping their IT departments to work hand-in-hand with railroad operations staffers to deliver these systems.

In another example, hospitals are increasingly pulling together SWAT teams consisting of medical informatics (the big data specialists); clinical technologists (the group responsible for selecting, deploying, and managing medical equipment); information technology; and information security to meet increasing demands for secure, effective, online data-management.

This isn’t all easy, of course. IT professionals are often more comfortable working behind the scenes, rather than being called on to take responsibility for an organisation’s mission-critical initiatives. But as the cliché has it, the Chinese symbol for “crisis” combines the symbols for “danger” and “opportunity.”

So yes, IT as we know it is over. But ET is just beginning.